Release time: 2024-04-26

On April 18, Meta officially released its latest open source model, Llama 3, claiming it to be the "most powerful open source large model" currently available.

What impact will the open-sourcing of the Llama 3 series have on the industry? Should one support open source, closed source, or both?

Ruoyu Technology, a company backed by Kunzhong, focuses on the multimodal general-purpose robot brain track. It has gradually formed a closed-source model framework with the best performance in the field of embodied intelligent robots. Let's see what our CEO Sun Teng has to say.


Just yesterday, Snowflake also released Arctic, which has 128 experts and 480B parameters. In open-sourcing the model, the team even disclosed how the training data was processed, which arguably makes it more "open source" than "open source".

Earlier in February, Google launched a new open source model series, Gemma, and Musk's xAI, Mistral AI, and Stability AI also publicly expressed their support for open source. At a time when artificial intelligence technology is changing with each passing day, open source certainly supports open scientific sharing, greater transparency, and preventing large technology companies from monopolizing powerful technologies, but many people also support closed source on this issue.

Sun Teng, co-founder and CEO of Ruoyu Technology, believes that choosing between open source and closed source is not an either-or question. In practice, the choice is more about which technology is most appropriate for solving practical problems in a given scenario. In the future, open source and closed source will coexist for a long time, because this will help accelerate the maturity and application of large model technology. At the same time, Sun Teng also said that this situation will trigger discussions on issues such as technology control, data privacy and market monopoly, which require joint attention and management from industry participants, government agencies and regulators.

Kunzhong Angel Investment Project "Ruoyu Technology" is a multimodal large model technology developer that aims to build a robot brain through multimodal large model technology. Based on its self-developed multimodal large model base, the Ruoyu Jiutian Big Model, combined with massive vertical-domain data, Ruoyu Technology has gradually formed a closed-source model framework with the best performance in the field of embodied intelligent robots.

Regarding the recent hot topics, we interviewed Sun Teng, co-founder and CEO of Ruoyu Technology. Let's take a look at his views below.

1. What are the technical iterations of Llama 3?

Llama 3 continues the Transformer structure of the previous generation in terms of overall architecture, with the following major improvements:

A. The token vocabulary is expanded from 32K to 128K to improve encoding efficiency

B. Supports context input of up to 8K tokens, though this still trails competitors

C. Introduces Grouped Query Attention (GQA) to improve inference efficiency
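To give a rough sense of why GQA helps at inference time, the sketch below estimates the key/value cache for one 8K-token sequence with and without shared key/value heads. The layer count, head counts, head dimension and 16-bit storage are illustrative assumptions for an 8B-class model, not figures from this interview, and `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Bytes needed to cache keys and values for a single sequence."""
    # Factor 2: each layer stores one key tensor and one value tensor.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


# Assumed 8B-class configuration (illustrative only).
N_LAYERS = 32
N_QUERY_HEADS = 32
HEAD_DIM = 128
CONTEXT = 8192          # the 8K context length mentioned above
N_KV_HEADS_GQA = 8      # assumed: every 4 query heads share one key/value head

# Standard multi-head attention keeps one key/value head per query head.
mha = kv_cache_bytes(N_LAYERS, N_QUERY_HEADS, HEAD_DIM, CONTEXT)
# Grouped query attention keeps only the shared key/value heads.
gqa = kv_cache_bytes(N_LAYERS, N_KV_HEADS_GQA, HEAD_DIM, CONTEXT)

print(f"MHA cache: {mha / 2**30:.1f} GiB")
print(f"GQA cache: {gqa / 2**30:.1f} GiB")
print(f"Reduction: {mha // gqa}x")
```

Under these assumptions the cache shrinks in proportion to the number of query heads that share each key/value head, which is where the inference-time memory and bandwidth savings come from.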

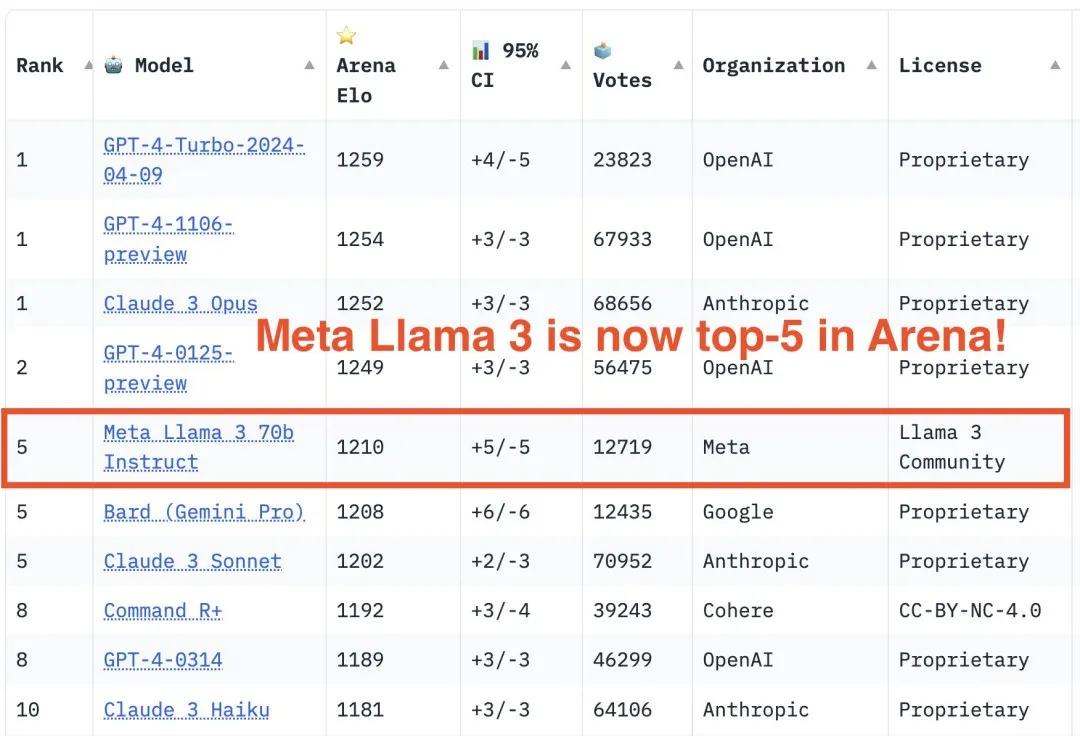

According to evaluations on MMLU, GPQA, HumanEval and other datasets, Llama 3-70B scored 82.0, 39.5 and 81.7 respectively, outperforming models of the same class such as Claude-Sonnet and Mistral-Medium, basically reaching the level of GPT-3.5+ and approaching GPT-4. The subsequent Llama-3-400B+ version is expected to further narrow the gap with GPT-4 and to rival models such as Gemini Ultra and Claude 3.

2. What new breakthroughs does Llama 3 have in model training data?

The 8B and 70B versions of Llama 3 were both trained on as many as 15T tokens, far exceeding the optimal data volumes predicted by the Chinchilla law, roughly 160B tokens for the 8B scale and 1.4T tokens for the 70B scale. This basically overturns the industry's understanding of the Chinchilla law.

In other words, even a small model of a fixed size can achieve log-linear improvement in performance as long as it is continuously fed with high-quality data. This opens up a new way of thinking about cost-effectiveness optimization and the development of an open source ecosystem: with small models plus massive data, it is also possible to strike a balance between performance and efficiency. Provided enough high-quality data is available, the upper limit of small and medium-sized models may far exceed expectations in the future.
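The Chinchilla figures quoted above (160B tokens for 8B parameters, 1.4T tokens for 70B) are consistent with the common rule of thumb of about 20 training tokens per parameter. The sketch below reproduces that arithmetic and shows how far a 15T-token run sits beyond the predicted optimum for the 8B model. The 20:1 ratio is an assumed approximation, and the function names are hypothetical.

```python
# Assumed rule of thumb: about 20 training tokens per model parameter.
TOKENS_PER_PARAM = 20


def chinchilla_optimal_tokens(n_params):
    """Approximate compute-optimal token count under the assumed 20:1 ratio."""
    return TOKENS_PER_PARAM * n_params


def overtraining_factor(n_params, trained_tokens):
    """How many times the training set exceeds the estimated optimum."""
    return trained_tokens / chinchilla_optimal_tokens(n_params)


opt_8b = chinchilla_optimal_tokens(8 * 10**9)    # 160B tokens
opt_70b = chinchilla_optimal_tokens(70 * 10**9)  # 1.4T tokens
factor_8b = overtraining_factor(8 * 10**9, 15 * 10**12)

print(f"Estimated optimum for 8B:  {opt_8b / 10**9:.0f}B tokens")
print(f"Estimated optimum for 70B: {opt_70b / 10**12:.1f}T tokens")
print(f"15T tokens is {factor_8b:.2f}x the 8B estimate")
```

Read this way, the 8B model is trained on nearly two orders of magnitude more data than the rule of thumb suggests, which is the gap the paragraph above refers to.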

3. What impact will the open-sourcing of the Llama 3 series have on the industry?

The open-sourcing of the Llama 3 series will provide more powerful base model capabilities for enterprises and entrepreneurs in the fields of large models and AI, on which they can develop and commercialize various value-added services and products, such as customizing specialized models for specific industries. In addition, Llama 3 also provides a new window of opportunity for AI startups, which are expected to launch solutions that match or even surpass existing products in certain vertical fields.

On the other hand, Llama 3 will also pose a major challenge to existing artificial intelligence companies, intensifying competition in the field and driving a survival-of-the-fittest shakeout.

4. What do you think of the increasing number of technology giants entering the open source large model market?

Indeed, many technology giants have joined the ranks of open source large models. Their participation brings the benefits of promoting technological innovation, lowering R&D thresholds, building a developer ecosystem, and fostering innovative applications. At the same time, it will also create new business models, such as driving the sales of cloud computing products.

However, we also see that many major domestic Internet companies actually launch closed-source large models. On the one hand, their application scenarios are mostly based on their own businesses, such as office work, conferencing, entertainment and productivity tools, and they use the capabilities of large models to enhance the competitiveness of their own products. On the other hand, this also involves efficiency tuning for vertical, domain-specific applications and the protection of industry data privacy, neither of which lends itself to open source.

Therefore, we believe that open source and closed source will coexist for a long time, which will help accelerate the maturity and application of large model technology, but at the same time it will also trigger discussions on issues such as technology control, data privacy and market monopoly. This trend requires the joint attention and management of industry participants, government agencies and regulators.

5. Do you support open source, closed source, or both?

We believe that this is not an either-or question, but one of mutual promotion and common development.

When making actual choices, it is more about choosing the most appropriate technology to solve practical problems in a given scenario. Open source models can accelerate prototype verification and build a developer ecosystem in some innovative fields and early product stages, improving innovation productivity. For example, the Linux open source community and the RISC-V open source chip architecture have greatly promoted the development of domestic operating systems, chip architecture design and other industries. However, open source also brings problems such as high maintenance costs and suboptimal performance in vertical domains.

Closed-source large models are more suitable for applications in special scenarios. QoS and performance are guaranteed, privacy, policy and abuse risks are avoided, and they help create top-performing, exciting products. We have noticed that, especially in the field of software and hardware integration, joint integration and tuning of software and hardware are required to achieve top performance. The development of mobile phones, drones and self-driving cars has confirmed this.

There are also many companies that adopt a hybrid strategy, such as open-sourcing some of their technologies to build communities and standards, while keeping core products or advanced features closed source to maintain commercial advantages. This model can protect key intellectual property and business interests while building brand reputation and community participation. For many companies, adopting a hybrid strategy may be the best way to take advantage of the benefits of open source while protecting core competitiveness through closed source.

Ruoyu Technology was founded in 2023. We focus on the multimodal general-purpose robot brain track. After long-term tracking of industry applications, we have accumulated a large amount of data and model designs in specific scenarios to provide intelligent human-computer interaction, task planning and action execution for embodied intelligent robots. This involves not only visual models, perception models and semantic large models, but also the action execution component introduced for the robot body and the model compression technology introduced to ensure that large models run efficiently on the device side. Combined with the robots' unique industry data, Ruoyu Technology has gradually formed a closed-source model framework with the best performance in the field of embodied intelligent robots.

Llama 3 model download link: https://llama.meta.com/llama-downloads/

Llama 3 GitHub project address: https://github.com/meta-llama/llama3
